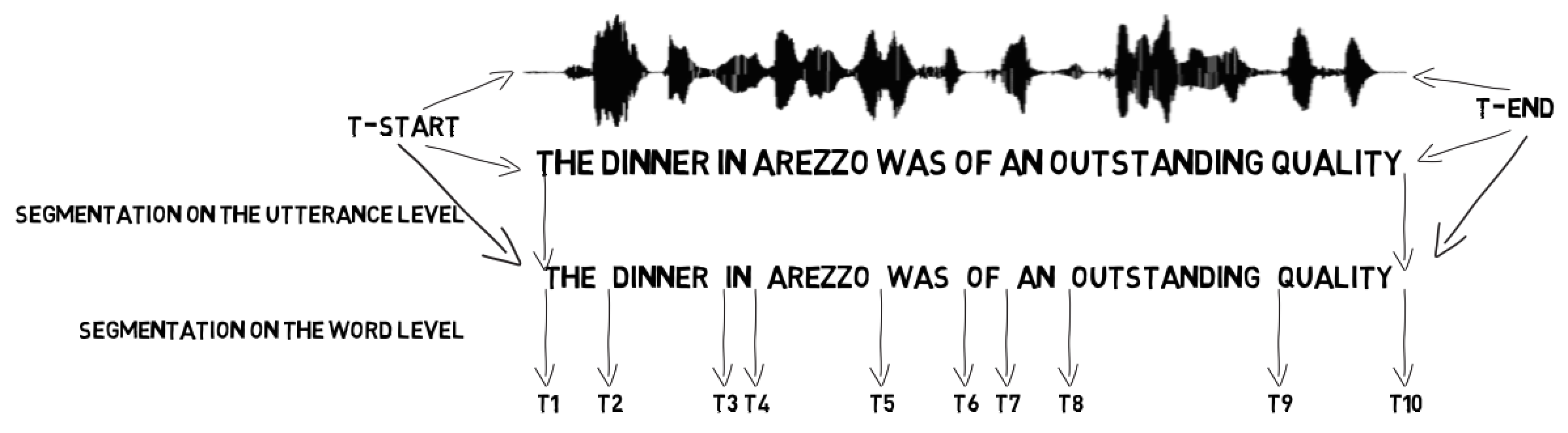

Forced Alignment is a kind of Automatic Speech Recognition, but it differs in one major respect. Rather than being given a set of possible words to recognise, the recognition engine is given an exact transcription of what is being spoken in the speech data. The system then aligns the transcribed data with the speech data, identifying which time segments in the speech data correspond best to particular words in the transcription data.

Below is an explanation of what it is and how it works. For a description of how to align your text with audio with the help of software (i.e. WebMAUS), see under Tools.

General

Forced Alignment (General)

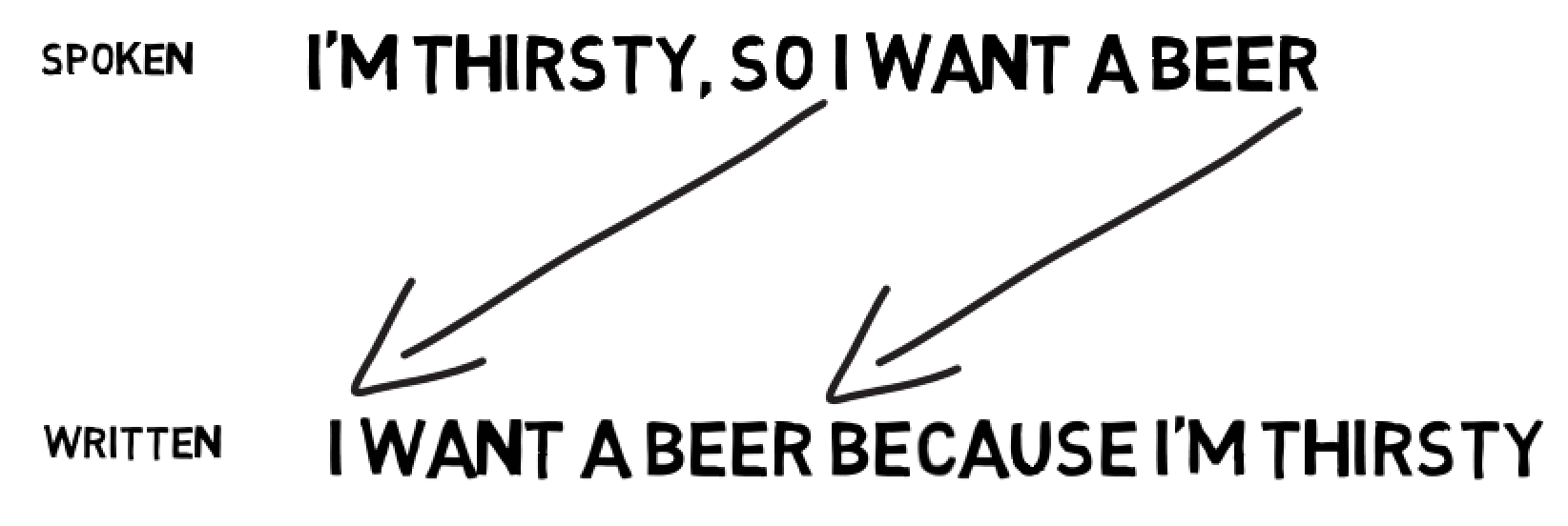

Difference between spoken and written text

Ideally, the written text to align with the audio is an exact copy of what was said (see image above). But often, transcriptions are a corrected, interpreted version of what was said.

If someone says "If I recognize well, I was, eh I mean, we went to the store, eh to the grocery store", it will often be transcribed as "If I recognize well, we went to the grocery store". All hesitations, internal corrections by the speaker and repetitions are skipped. The result is a grammatically correct version of what was meant, but it does differ from what was said. Forced Alignment (FA) can handle this kind of deviation between written and spoken content as long as the difference is not too big.
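How far a cleaned-up transcript deviates from what was said can be estimated by comparing the two word sequences. The sketch below is only an illustration under assumptions: it uses Python's standard `difflib` on a hand-made verbatim version of the example sentence (in practice the verbatim word sequence is usually not available), and the function name `compare` is hypothetical.

```python
from difflib import SequenceMatcher

def compare(spoken: str, written: str):
    """Return the spoken words missing from the transcript and the
    fraction of transcript words that were found in the spoken sequence."""
    said = spoken.lower().split()
    text = written.lower().split()
    matcher = SequenceMatcher(None, said, text, autojunk=False)
    skipped, matched = [], 0
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            matched += i2 - i1
        elif tag in ("delete", "replace"):
            # spoken words that have no counterpart in the transcript
            skipped.extend(said[i1:i2])
    return skipped, matched / len(text)

spoken = ("If I recognize well I was eh I mean we went to the store "
          "eh to the grocery store")
written = "If I recognize well we went to the grocery store"
skipped, coverage = compare(spoken, written)
print("skipped by the transcriber:", skipped)
print("transcript words found in speech:", coverage)
```

A long list of skipped words, or a low coverage, is a hint that the alignment may need manual checking.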

It becomes more difficult if parts of the written text have been switched, like:

If the spoken "I want a beer" part is correctly matched to the written version, the part before "I want a beer" has no text, and the written part ("because I'm thirsty") has no time. For searching this isn't a big problem, but if the aligned text will be used for subtitling, some manual correction will be necessary.


Unknown words and Phonetic Transcriptions

A problem may arise if the text (and audio) contains foreign words, abbreviations and (large) numbers. In order to align, the written text is transformed into phonemes according to the language setting. But how to deal with words that are foreign to the target language? Suppose you have a written phrase like "Mrs Uytendenboogaard and Mr. Chukwuemeka have a boat trip on the Bhagirathi River". This phrase combines a Dutch and a Nigerian family name with the name of an Indian river. What will be the correct pronunciation?

The first step in each FA process is the transformation (= phonetic transcription) from words to phonemes. Then, these phonemes are matched with the spoken content. This means that the phonetic transcription is crucial, but this transcription is language-dependent! E.g. the word boat will be transcribed as /b o: t/ when you use the English G2P (= Grapheme-to-Phoneme) converter. But if you use the Dutch G2P, the phonetic transcription will be /b o: A t/.

If we pass the example sentence through a G2P, it will result in:

| | Transcription |
| --- | --- |
| TXT | Mrs Uytendenboogaard and Mr. Chukwuemeka have a boat trip on the Bhagirathi River |
| UK | mIs@z uytendenboogaard {nd mIst@r chukwuemeka h{v @ bot trIp An D@ bhagirathi rIv@r |
| NL | Em Er Es Ytd@nboxart And Em Er XukwuEm@ka hav@ a boAt trIp On d@ bagirati riv@r |

The transcription was done with an automatic G2P for English and Dutch. The blue words mean: "No idea how to pronounce it". The Dutch G2P decided to spell out the unknown words (so Mr. becomes Em Er). The difference between these two transcriptions is huge, and the Forced Alignments based on the two transcriptions may differ significantly as well.

This small example shows that the phonetic transcription, crucial for correct Forced Alignment, is less straightforward than often expected. Abbreviations and unknown/foreign words may ruin the alignment. A good FA-system must therefore interact with the user about the phonetic transcription, presenting the user with its best guess for initially unknown words. The user may enter the phonetic transcription of the words (using the phonetic alphabet) or a "sounds-as" word ("Bhagirathi" sounds as "bakirati"). Once the initially unknown word has been transcribed by the user, it may be entered into a phonetic dictionary for further use.
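The interaction described above (look up each word, report the unknown ones, let the user add them to a dictionary) could look roughly like the sketch below. Everything in it is an assumption for illustration: the mini-lexicon, the phoneme strings and the function names `transcribe` and `add_entry` are invented and do not reflect the G2P converters used for the table.

```python
# Hypothetical mini-lexicon in a SAMPA-like notation (illustrative values only).
LEXICON = {
    "boat": "b o: t",
    "trip": "t r I p",
    "river": "r I v @ r",
}

def transcribe(words, lexicon):
    """Return (phonemes per word, unknown words). Unknown words get None."""
    phonemes, unknown = [], []
    for word in words:
        key = word.lower().strip(".,")
        if key in lexicon:
            phonemes.append(lexicon[key])
        else:
            phonemes.append(None)   # no idea how to pronounce it
            unknown.append(word)
    return phonemes, unknown

def add_entry(lexicon, word, phonemes):
    """Store a user-supplied transcription for later use."""
    lexicon[word.lower()] = phonemes

words = "boat trip Bhagirathi River".split()
_, unknown = transcribe(words, LEXICON)
print("ask the user about:", unknown)

# The user supplies a transcription (an invented one here).
add_entry(LEXICON, "Bhagirathi", "b a g i r a t i")
phonemes, unknown = transcribe(words, LEXICON)
print(phonemes, unknown)
```

Keeping the user's answers in the lexicon means that each unknown word has to be resolved only once per collection.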

Audio quality

The quality of the audio signal (i.e. the speech sound) may influence the accuracy of the alignment: the better the audio (less or no background noise), the more accurate the alignment.

Tools

Forced Alignment (Tools)

A special version of Automatic Speech Recognition (ASR) is Forced Alignment. With ASR, the software has to determine which of the ≈264K words was spoken. ASR uses the acoustic features in combination with the language model to estimate the words spoken. As input, ASR requires the audio file only.

Forced Alignment works a bit differently because it presupposes that what was said is known beforehand. The only thing the software has to figure out is the timing information: when did each word start and when did it stop. To do so, the software needs the audio-file and the transcription-file.
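The timing search that Forced Alignment performs can be illustrated with a toy dynamic-programming example. The sketch below is a strong simplification under stated assumptions, not how WebMAUS or any real aligner is implemented: each audio frame is reduced to a single invented number and each unit of the transcription to one expected value, whereas real systems compare acoustic features against statistical models of the phonemes. The function name `force_align` is hypothetical.

```python
def force_align(frames, templates):
    """Assign every frame to one unit of the known sequence, in order.

    frames:    one number per audio frame (toy stand-in for acoustic features)
    templates: one expected value per unit (toy stand-in for a phoneme model)
    Returns one (start_frame, end_frame) span per unit, end exclusive.
    """
    n, m = len(frames), len(templates)
    if m == 0 or n < m:
        raise ValueError("need at least one frame per unit")
    INF = float("inf")
    cost = [[INF] * m for _ in range(n)]
    entered = [[False] * m for _ in range(n)]  # True: unit s starts at frame t
    cost[0][0] = abs(frames[0] - templates[0])
    for t in range(1, n):
        for s in range(m):
            stay = cost[t - 1][s]                         # remain in unit s
            advance = cost[t - 1][s - 1] if s > 0 else INF  # move on from s-1
            best = min(stay, advance)
            if best == INF:
                continue                                  # unit s not reachable yet
            entered[t][s] = advance < stay
            cost[t][s] = best + abs(frames[t] - templates[s])
    # Backtrack from the last frame of the last unit to recover the boundaries.
    spans, end, s = [], n, m - 1
    for t in range(n - 1, -1, -1):
        if t == 0 or entered[t][s]:
            spans.append((t, end))
            end = t
            s -= 1
    spans.reverse()
    return spans

frames = [1, 1, 1, 5, 5, 9, 9, 9]   # invented "audio"
templates = [1, 5, 9]               # invented models for three units
print(force_align(frames, templates))
```

Because the order of the units is fixed by the transcription, the search only has to decide where each boundary falls, which is what makes the task easier than open recognition.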

A more elaborate explanation, with some phonetic examples, can be found at technology/forced-alignment.

WebMAUS

A very useful service for aligning audio and text is the CLARIN WebMAUS-basic service of the Phonetics department of the LMU (München). The service needs two files: the audio-file and an ASCII/UTF-8 text file containing the transcription.

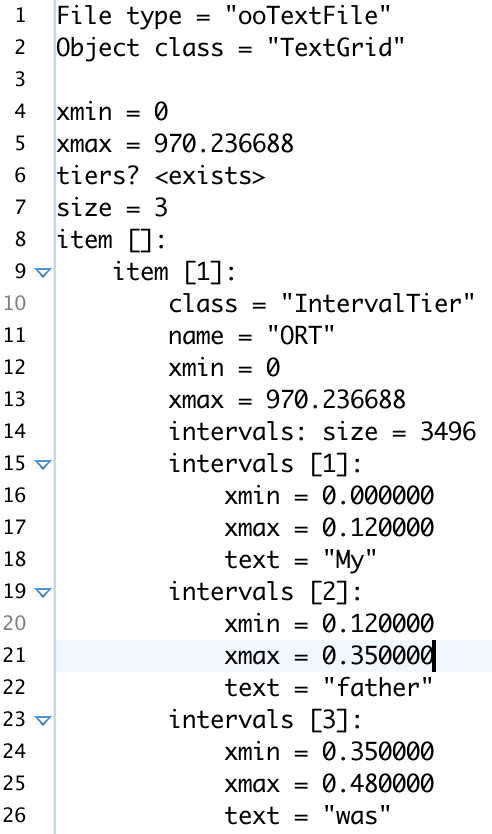

Fig. 1: The result of forced alignment with WebMAUS; a TextGrid-file with only the start and end-times of each aligned word.

The result of the WebMAUS forced alignment is a TextGrid-file (a format you can use in PRAAT, see fig. 1) that contains both an orthographic output (the start and end times of the words in the transcription-file) and a phonetic output (the start and end times of the phonemes of the words).
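To give an idea of how the word timings can be read from such a file, here is a minimal sketch that extracts the intervals from a TextGrid in Praat's long text format. The sample content, the tier name `ORT-MAU` and the function name `read_words` are assumptions for illustration; the sketch handles a single tier only and ignores escaped quotes, so a dedicated TextGrid parser is preferable for real files.

```python
import re

# Invented sample in Praat's long TextGrid text format (one word tier only).
SAMPLE = '''File type = "ooTextFile"
Object class = "TextGrid"

xmin = 0
xmax = 1.2
tiers? <exists>
size = 1
item []:
    item [1]:
        class = "IntervalTier"
        name = "ORT-MAU"
        xmin = 0
        xmax = 1.2
        intervals: size = 3
        intervals [1]:
            xmin = 0
            xmax = 0.35
            text = "mister"
        intervals [2]:
            xmin = 0.35
            xmax = 0.5
            text = ""
        intervals [3]:
            xmin = 0.5
            xmax = 1.2
            text = "arjan"
'''

INTERVAL = re.compile(
    r'intervals \[\d+\]:\s*xmin = ([\d.]+)\s*xmax = ([\d.]+)\s*text = "([^"]*)"'
)

def read_words(textgrid: str):
    """Return (word, start, end) for every non-empty interval."""
    words = []
    for start, end, text in INTERVAL.findall(textgrid):
        if text.strip():                     # empty intervals are pauses
            words.append((text, float(start), float(end)))
    return words

for word, start, end in read_words(SAMPLE):
    print(f"{start:6.2f} {end:6.2f} {word}")
```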

For Oral History, the orthographic output is enough.

WebMAUS has more than 36 languages to choose from. In case a specific language is not available, the software works well with a language that is (acoustically) close to the spoken language. For example, we have force-aligned a Russian spoken interview with Dutch as the selected language.

Problems

There are a couple of issues when starting to align the transcriptions. The main issue is "abbreviations": the difference between the way a word is written and how it is pronounced by the majority of native speakers.

Abbreviations

Easy problems are words without a vowel: they are spelled out or "replaced" by the original word.

- NCRV → /E n - s e - E r - v e/ (a Dutch broadcast organisation)

- Mr → Mister → /M I s - t @ r/

If words contain one or more vowels, it depends on local convention.

- NOS → N. O. S. → /E n - o - E s/ (the national Dutch broadcast organisation). NOS can be pronounced as nos /n O s/, but no one does so.

- RAI → /r Ai/ (the national Italian broadcast organisation). The word is perfectly pronounceable in Italian, so no one spells it out.

Fig. 2: An example of the two pronunciations of dr in one street name.

A particular kind of abbreviation is one whose expansion depends on the context.

- An appointment with dr. Corti → an appointment with doctor Corti

- An appointment on the Corti dr. → an appointment on the Corti drive

Numbers

A special kind of abbreviation is a number. A normal number like 19 sounds as nineteen → /n Ai n - t i n/. But numbers are context sensitive as well.

- my phone number is 621888146 → 6 2 1 8 8 8 (or 3 times 8) 1 4 6

- the cost in euro of that bridge is 621888146 → six hundred twenty-one million etc.

So, before using the G2P, one needs to preprocess the text in order to determine the most likely pronunciation.
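Such a preprocessing step could be sketched as follows. This is a minimal illustration under assumptions, not the normalisation that WebMAUS applies: the number speller only covers 0 to 99, the rule for "dr." (doctor before a capitalised word, drive otherwise) is a simple invented heuristic, and all function names are hypothetical.

```python
ONES = ("zero one two three four five six seven eight nine ten eleven twelve "
        "thirteen fourteen fifteen sixteen seventeen eighteen nineteen").split()
TENS = "_ _ twenty thirty forty fifty sixty seventy eighty ninety".split()

def number_to_words(n: int) -> str:
    """Spell out a cardinal number; this sketch only covers 0..99."""
    if not 0 <= n <= 99:
        raise ValueError("sketch only covers 0..99")
    if n < 20:
        return ONES[n]
    tens, ones = divmod(n, 10)
    return TENS[tens] + ("-" + ONES[ones] if ones else "")

def spell_digits(digits: str) -> str:
    """Read a digit string one digit at a time, as for a phone number."""
    return " ".join(ONES[int(d)] for d in digits)

def expand_dr(tokens):
    """Heuristic: 'dr.' before a capitalised word is 'doctor', else 'drive'."""
    out = []
    for i, tok in enumerate(tokens):
        if tok.lower() == "dr.":
            nxt = tokens[i + 1] if i + 1 < len(tokens) else ""
            out.append("doctor" if nxt[:1].isupper() else "drive")
        else:
            out.append(tok)
    return out

print(number_to_words(19))
print(spell_digits("621888146"))
print(" ".join(expand_dr("the dr. Luther King dr.".split())))
```

Whether a digit string is a quantity or a phone number cannot be decided from the digits alone, so in this sketch the caller has to choose between `number_to_words` and `spell_digits`.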

Human transcriptions

When a transcription is made by experienced transcribers, one may expect that these kinds of "problems" are (partly) solved by the way the speech is orthographically transcribed. So if someone says "the doctor Luther King drive" you may hope that it is not transcribed as "the dr. Luther King dr.". In general, it is preferable that transcribers do not use abbreviations or numbers, but for practical reasons (speed, habit) they do.

So, transcribers will probably use numbers, as the transcription example in fig. 3 below shows. In order to align the text with the audio, however, WebMAUS first rewrites abbreviations and numbers into full words (Mr → Mister, 19 → nineteen). This means that the transcription you present and the resulting TextGrid-file differ on abbreviations and numbers.

[Input WebMAUS] "Mr Arjan is 19 years old" → [Output WebMAUS] "Mister Arjan is nineteen years old".

Speaker segmentation

Another issue is the speaker segmentation. In many human-made transcriptions, the speakers are annotated with their name or role, followed by a colon ("John: I was working in London, when....." or "Int: I was working in London, when...").

The words John or Int are not spoken, so they have to be removed before aligning the audio with the text. But this means that all information about who-said-what, is removed as well.
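One way to avoid losing the who-said-what information is to split off the speaker labels and keep them next to the text instead of throwing them away. The sketch below assumes that every turn starts with a label without spaces followed by a colon; the pattern and the function name `split_turns` are illustrative assumptions, and a line that starts with something like "10:30" would be misread as a label.

```python
import re

# A label is a run of non-space, non-colon characters at the start of a line.
LABEL = re.compile(r"^\s*([^:\s]+):\s*(.*)$")

def split_turns(lines):
    """Return a list of (speaker, text) turns."""
    turns = []
    for line in lines:
        match = LABEL.match(line)
        if match:
            turns.append((match.group(1), match.group(2)))
        elif line.strip() and turns:
            # continuation line: append to the current speaker's turn
            speaker, text = turns[-1]
            turns[-1] = (speaker, text + " " + line.strip())
    return turns

lines = [
    "Int: Where did you work?",
    "John: I was working in London, when...",
    "the war started.",
]
turns = split_turns(lines)
spoken_only = " ".join(text for _, text in turns)   # input for the aligner
print(turns)
print(spoken_only)
```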

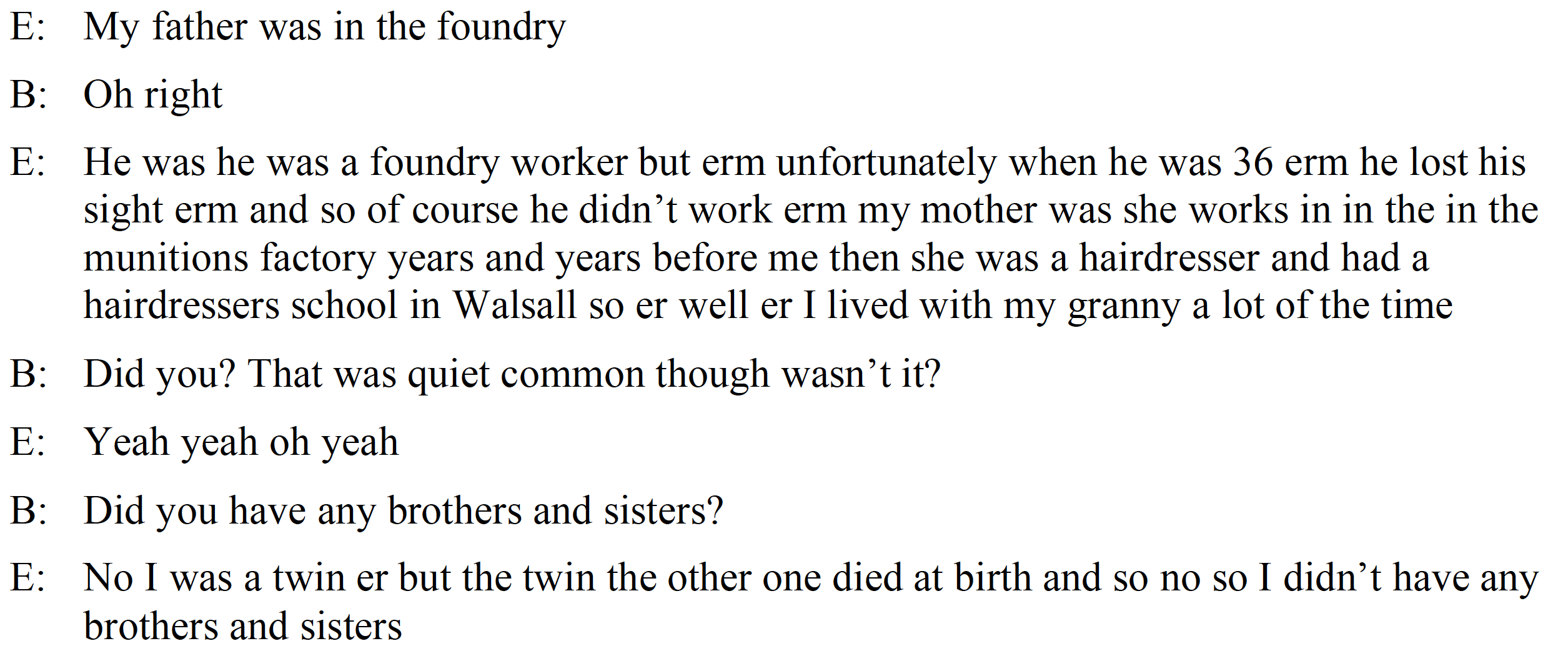

Fig. 3: A screenshot of an original English transcript with speaker segmentation and punctuation.

Courtesy Maureen Haaker, Essex University

So, the end result of forced alignment by WebMAUS is one string of words, independent of who was speaking and without full stops, question marks, commas and other punctuation.

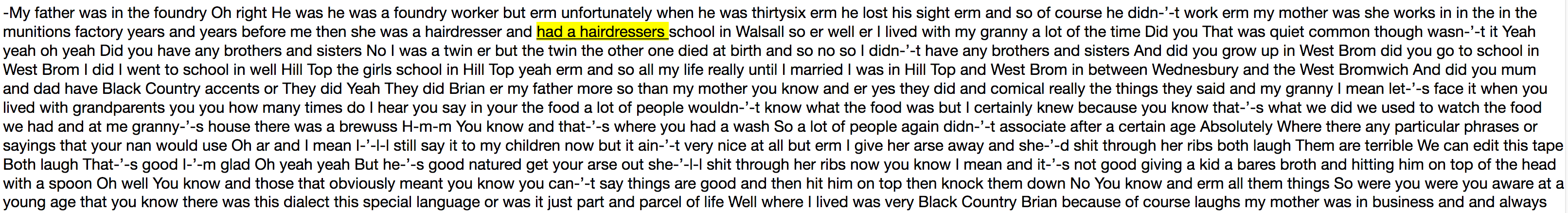

Fig. 4: Example of the Forced Alignment with WebMAUS. The original transcript with speaker segmentation and punctuation (see fig. 3 above) is "transformed" into one string of adjacent words. The yellow selection was the word spoken when the screenshot was made.

The number 36 in the original transcription (third paragraph) was rewritten into thirtysix in the aligned output (first row).

Solution

Cleaning the transcription

The original transcription (aka the Word-file) contains all the required information: the words, the speakers, the punctuation and the non-verbal utterances, as can be seen in the screenshots of the English (Fig. 3 above) and Italian (Fig. 7 below) transcripts. The required input for WebMAUS is a plain ASCII/UTF-8 text file containing nothing but the spoken words. So, the first step is to save the MS Word transcription files as text-only UTF-8. The easiest way is to do it by hand from within the Word application (Save As...).

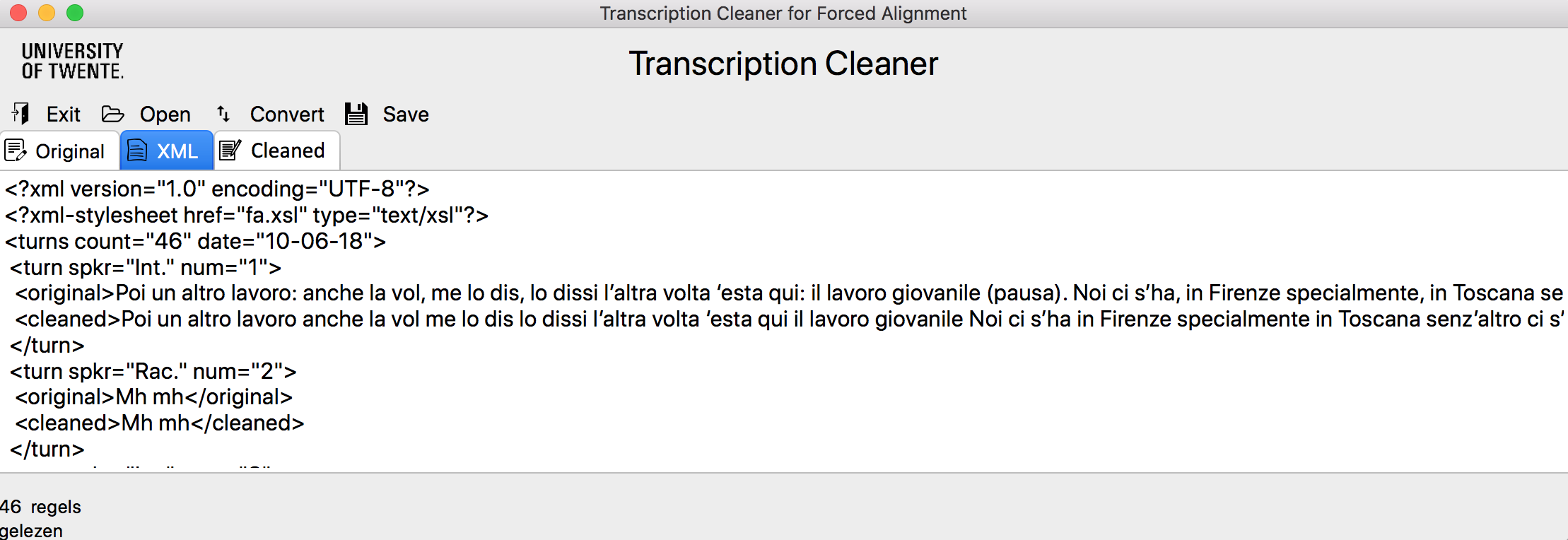

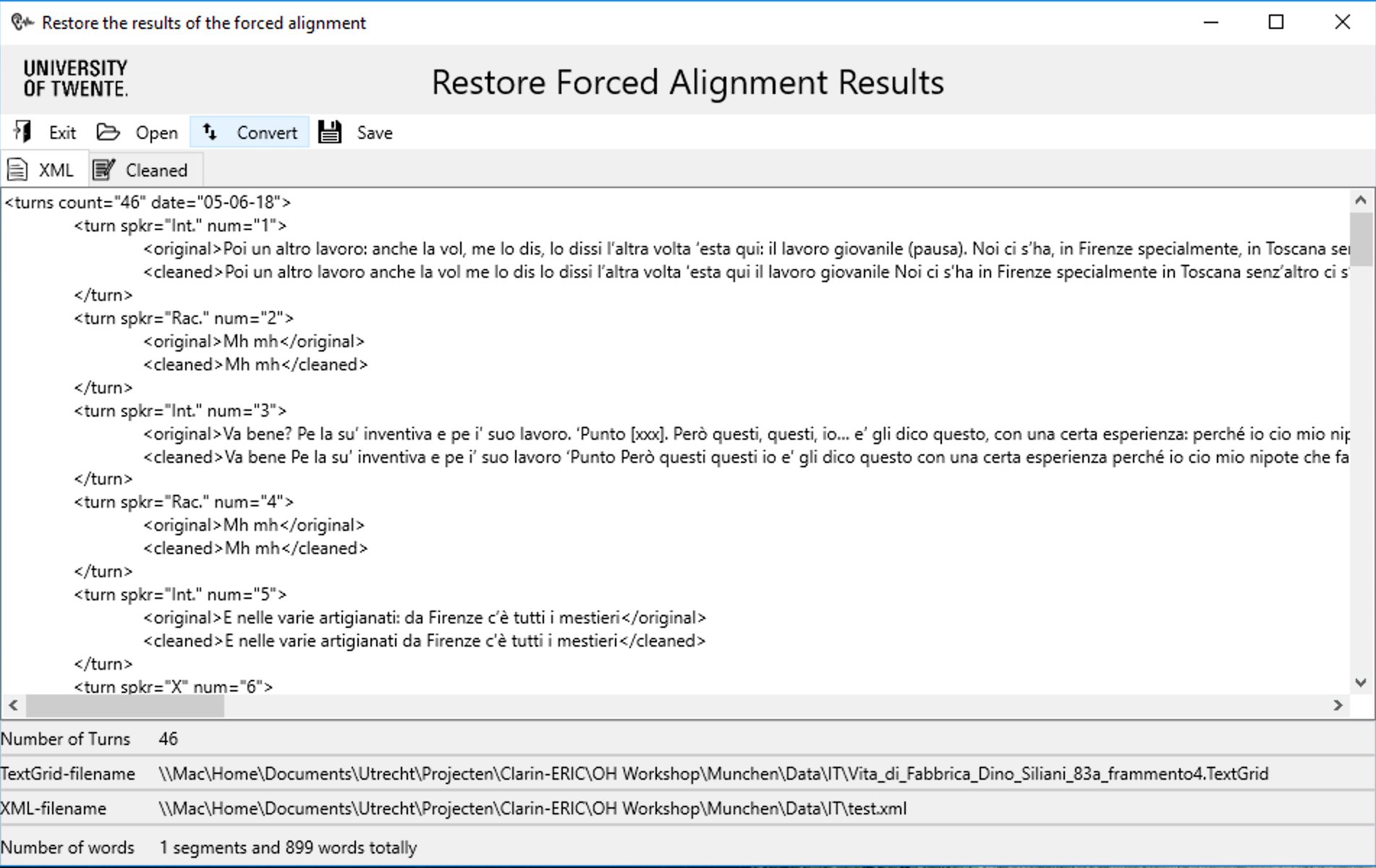

Once the UTF-8 files are available, they can be read with Transcription Cleaner, a small Windows/OSX application that allows the user to select the transcription text and saves it in an XML-file with turns containing the speaker as an attribute and the <original> and <cleaned> text as two text-elements (see Fig. 5 below).

Fig. 5: The output of the Transcription Cleaner app with turns containing the speaker-ID, the original text and the cleaned text. The cleaned text is equal to the original text but without punctuation and non-verbal markers like (pausa) or [xxx] (i.e. not understandable).

Besides this XML-file, the program saves the cleaned text-elements as a UTF-8 text-file with just the cleaned text. This cleaned text-file can be used for the WebMAUS forced alignment.
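The cleaning step can be approximated in a few lines. The sketch below is not the Transcription Cleaner application itself: the cleaning rules, the root element name `transcript`, the function names and the example sentence are assumptions; only the `turn` element with a `speaker` attribute and the `original` and `cleaned` children follow the description above.

```python
import re
import xml.etree.ElementTree as ET

def clean(text: str) -> str:
    """Remove non-verbal markers and punctuation, keep the spoken words."""
    text = re.sub(r"\([^)]*\)|\[[^\]]*\]", " ", text)  # (pausa), [xxx], ...
    text = re.sub(r"[^\w\s']", " ", text)              # punctuation, keep apostrophes
    return " ".join(text.split())                      # collapse whitespace

def build_xml(turns) -> str:
    """turns: list of (speaker, original_text). Returns an XML string."""
    root = ET.Element("transcript")                    # root name is an assumption
    for speaker, original in turns:
        turn = ET.SubElement(root, "turn", speaker=speaker)
        ET.SubElement(turn, "original").text = original
        ET.SubElement(turn, "cleaned").text = clean(original)
    return ET.tostring(root, encoding="unicode")

turns = [("A", "Sì, (pausa) l'ho visto [xxx] ieri.")]  # invented example turn
print(build_xml(turns))
print(" ".join(clean(text) for _, text in turns))      # plain text for the aligner
```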

Aligning the FA-result with the non-cleaned transcription

The result of the Forced Alignment (FA) by WebMAUS is a TextGrid-file (as can be seen in Fig. 1 above) with just the aligned words and their start and end times. In another program these WebMAUS results and the cleaned text are 'aligned', resulting in an alignment that includes the speaker-ID. In the coming months we will try to glue the WebMAUS results not only to the cleaned text, but also to the original text. If this can be done, it will result in the original transcription including the start and end time of each transcribed word.
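When the cleaned text and the aligner output contain the same words in the same order, attaching the speaker-ID is a matter of walking through both lists in parallel. The sketch below assumes exactly that one-to-one correspondence and stops with an error otherwise; the data structures and the function name `attach_speakers` are hypothetical and not taken from the actual program.

```python
def attach_speakers(turns, aligned):
    """Combine cleaned turns with aligned words.

    turns:   list of (speaker, cleaned_text)
    aligned: list of (word, start, end) in spoken order
    Returns a list of (speaker, word, start, end).
    """
    result, pos = [], 0
    for speaker, cleaned in turns:
        for word in cleaned.split():
            if pos >= len(aligned) or aligned[pos][0].lower() != word.lower():
                raise ValueError(f"mismatch at aligned word {pos}: {word!r}")
            _, start, end = aligned[pos]
            result.append((speaker, word, start, end))
            pos += 1
    return result

turns = [("Int", "where did you work"), ("John", "i was working in london")]
words = "where did you work i was working in london".split()
aligned = [(w, i * 0.5, i * 0.5 + 0.5) for i, w in enumerate(words)]  # invented times
for row in attach_speakers(turns, aligned):
    print(row)
```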

Fig. 6: The app to 'glue' the cleaned text (including speaker-ID) with the results of the forced alignment of WebMAUS.


Example

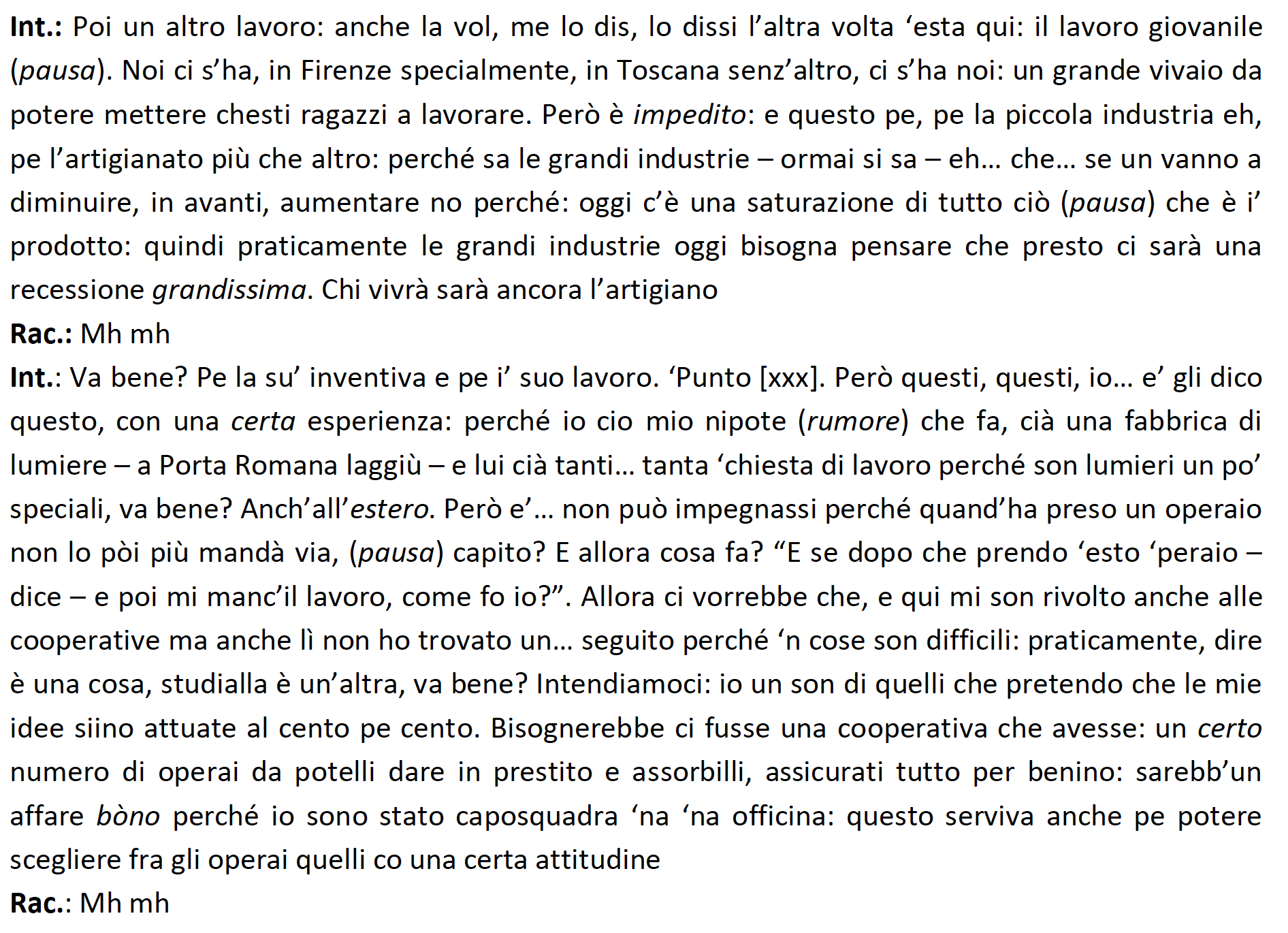

We have tried to do so for some Italian transcripts. We succeeded because the original Italian transcriptions were rather straightforward and did not contain abbreviations or numbers (for the explanation, see below).

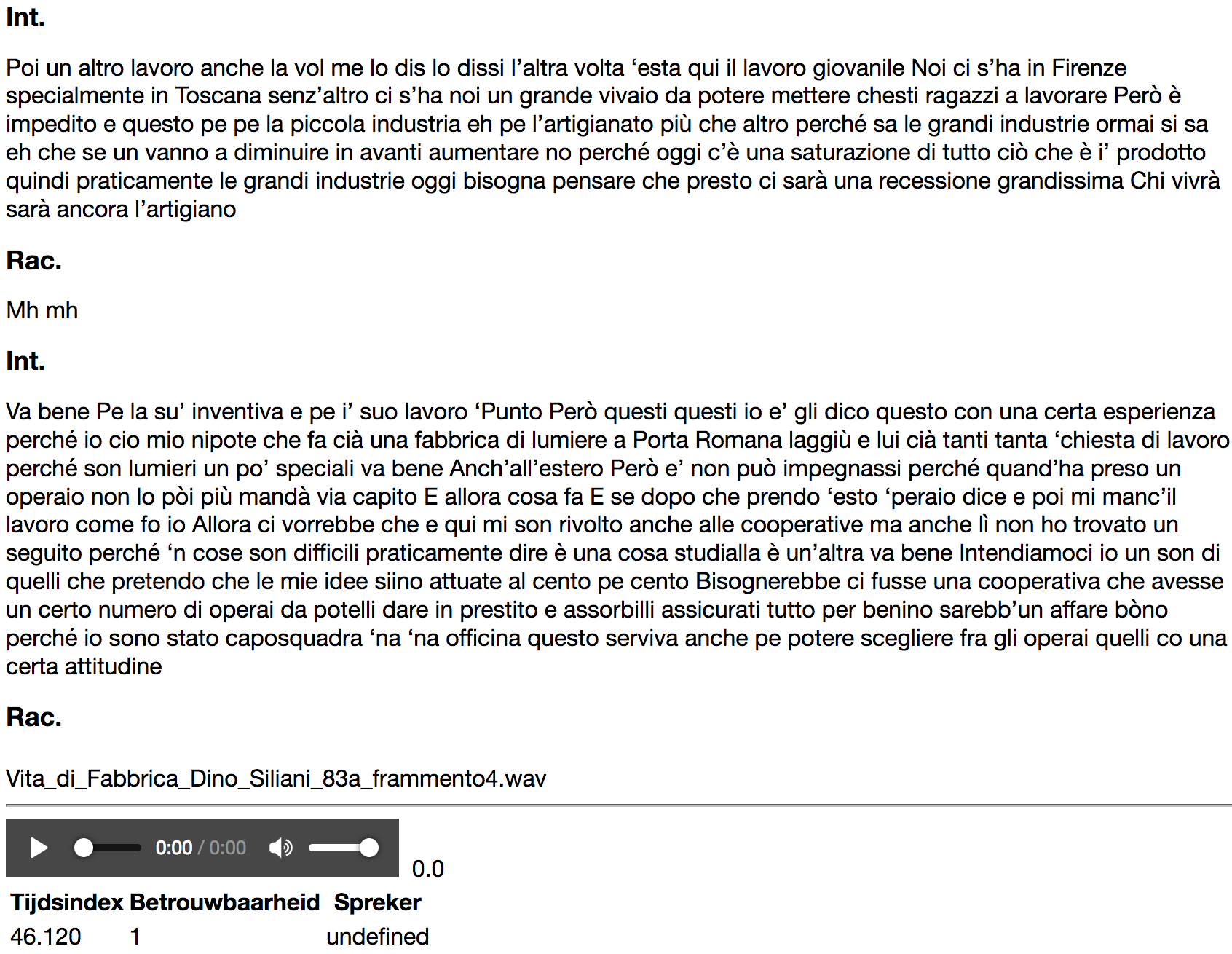

In fig. 7 we see the original transcription and in fig. 8 the final result: a player that highlights the word being spoken.

Fig. 7: Example of an Italian transcript with 4 speaker turns. Not all the words are "correct" Italian.

The text is in a Tuscan dialect and the spelling tries to reflect this deviating speaking style.

Courtesy Silvia Calamai, Universitá di Arezzo

Fig. 8: The resulting "Karaoke" style transcription. The words can be "played" by clicking on a word. The time-information (start and end time of each word) from the forced alignment is saved but the look-and-feel is "copied" from the original transcription.

Problems

The software works well but there are some "issues". In the English transcriptions, numbers are often used (for example: "I saw 2 ladies of 36 years").

WebMAUS internally rewrites 2 → two and 36 → thirtysix. This is done because WebMAUS is basically a phonetic aligner and thus needs the numbers to be spelled out in order to do this "phonetic alignment". The same is true for abbreviations: mr. → mister etc.

But as a result, the application has to align 36 with thirtysix, and mr. with mister, which makes the software much more complex.
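One possible way to handle this extra step is to compare the original tokens with the rewritten words and to pass the timing across wherever the two differ. The sketch below uses Python's standard `difflib` for that comparison; it is an illustration under assumptions, not the method used in the application described here, and the function name `map_times` and the time values are invented.

```python
from difflib import SequenceMatcher

def map_times(original, aligned):
    """Give each original token the time span of its rewritten counterpart.

    original: tokens as written by the transcriber, e.g. ["36", "years"]
    aligned:  (word, start, end) as returned by the aligner
    """
    words = [w.lower() for w, _, _ in aligned]
    tokens = [t.lower() for t in original]
    matcher = SequenceMatcher(None, tokens, words, autojunk=False)
    result = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            for i, j in zip(range(i1, i2), range(j1, j2)):
                result.append((original[i], aligned[j][1], aligned[j][2]))
        elif tag == "replace":
            # e.g. "36" versus "thirtysix": share the span of the rewritten words
            start, end = aligned[j1][1], aligned[j2 - 1][2]
            for i in range(i1, i2):
                result.append((original[i], start, end))
        elif tag == "delete":
            # original token without any aligned counterpart: no time known
            for i in range(i1, i2):
                result.append((original[i], None, None))
        # "insert": aligned words without an original token are dropped
    return result

original = "I saw 2 ladies of 36 years".split()
rewritten = "I saw two ladies of thirtysix years".split()
aligned = [(w, i * 0.5, i * 0.5 + 0.5) for i, w in enumerate(rewritten)]  # invented times
for row in map_times(original, aligned):
    print(row)
```

This keeps the transcriber's spelling in the output while the timing still comes from the spelled-out words.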