Automatic Speech Recognition (ASR) is software that converts recorded speech into written text, a process somewhat analogous to Optical Character Recognition (OCR), which converts images of text into machine-readable characters.

From 2000 to 2018, ASR was largely propelled by classical Machine Learning technologies such as Hidden Markov Models. These models, despite their historical dominance, began to stagnate in accuracy, paving the way for innovative approaches driven by cutting-edge Deep Learning technology.

The advent of potent generative AI has brought about a seismic shift in this field. Presently, in 2023, the best ASR models operate on an End-to-End (E2E) basis, where audio is converted directly into text in a single step. Notable examples include the Wav2Vec-2 model by Facebook/Meta, released in 2020, and the more recent Whisper by OpenAI, launched in September 2022. The latter in particular has shown exceptional performance, recognizing approximately 100 different languages with a single model.

Legacy speech recognition technologies, such as Kaldi, are progressively becoming obsolete. Although they may still have niche applications where their performance is acceptable, the unprecedented superiority of models like Wav2Vec-2 and Whisper negates the necessity to further invest in these dated ASR models.

Whisper, a new ASR engine

Whisper is an automatic speech recognition (ASR) system trained on 680,000 hours of multilingual and multitask supervised data collected from the web. The developer of Whisper, OpenAI, shows that the use of such a large and diverse dataset leads to improved robustness to accents, background noise and technical language. Moreover, it enables transcription in multiple languages, as well as translation from those languages into English.

Available models and languages

There are five model sizes, four with English-only versions, offering speed and accuracy tradeoffs. Below are the names of the available models and their approximate memory requirements and relative speed.

| Size | Parameters | English-only model | Multilingual model | Required VRAM | Relative speed |
|---|---|---|---|---|---|
| tiny | 39 M | tiny.en | tiny | ~1 GB | ~32x |
| base | 74 M | base.en | base | ~1 GB | ~16x |
| small | 244 M | small.en | small | ~2 GB | ~6x |
| medium | 769 M | medium.en | medium | ~5 GB | ~2x |
| large | 1550 M | N/A | large | ~10 GB | 1x |

The .en models for English-only applications tend to perform better, especially tiny.en and base.en. OpenAI observed that the difference becomes less significant for small.en and medium.en.

All nine models (five multilingual and four English-only) are open-source and can be downloaded to your own computer.
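As a rough illustration of the speed and accuracy tradeoff, the sketch below picks the largest model whose approximate VRAM requirement (taken from the table above) fits a given graphics card. The function name `pick_model` and the selection rule are assumptions made for this example, not part of Whisper itself.

```python
from typing import Optional

# (size, approximate required VRAM in GB, has an English-only variant),
# ordered from smallest to largest, copied from the table above.
MODELS = [
    ("tiny", 1, True),
    ("base", 1, True),
    ("small", 2, True),
    ("medium", 5, True),
    ("large", 10, False),
]


def pick_model(vram_gb: float, english_only: bool = False) -> Optional[str]:
    """Return the largest model whose approximate VRAM need fits, or None."""
    chosen = None
    for size, needed_gb, has_en in MODELS:
        if needed_gb <= vram_gb:
            chosen = (size, has_en)  # later (larger) models overwrite earlier ones
    if chosen is None:
        return None
    size, has_en = chosen
    # Prefer the ".en" variant when the audio is English and one exists.
    return f"{size}.en" if english_only and has_en else size


if __name__ == "__main__":
    print(pick_model(6, english_only=True))
    print(pick_model(12, english_only=True))
```

The VRAM figures are approximate, so treat the result as a starting point and fall back to a smaller model if memory runs out.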

From my perspective, Whisper currently stands as an exceptional, if not the leading, ASR engine available. It serves as an excellent foundation for developing practical applications and fostering further research in robust speech processing. Launched in September 2022, Whisper has garnered a substantial amount of positive feedback.

Privacy Concerns

Privacy is a paramount consideration when dealing with Audio-Visual (AV) recordings, and even more so with "sensitive" recordings, which the interviewer or the owner of the recordings must handle carefully and responsibly. Whisper is versatile in where it can run: on a high-performance server, a personal laptop, or anything in between. It delivers consistent recognition results across platforms; the main variable is processing speed, which depends on your computer's capabilities. The more powerful your device is, especially if it has a graphics card, the faster the recognition. Therefore, if you work with sensitive data and own a fast computer, we strongly recommend installing Whisper on your own system to minimize the risk of data breaches.
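A minimal sketch of local transcription, assuming the open-source `openai-whisper` Python package and ffmpeg are installed. The helper names `build_options` and `transcribe_locally` and the file name `interview.wav` are hypothetical; the `load_model` and `transcribe` calls come from the package itself.

```python
from typing import Optional


def build_options(language: Optional[str] = None, translate: bool = False) -> dict:
    """Collect keyword arguments for a transcription call."""
    options = {"task": "translate" if translate else "transcribe"}
    if language is not None:
        options["language"] = language  # e.g. "nl"; omit to let Whisper detect it
    return options


def transcribe_locally(path: str, model_name: str = "base", **kwargs) -> str:
    """Transcribe an audio file on this machine and return the text."""
    # Imported lazily so the helper above works without the package installed.
    import whisper  # pip install openai-whisper (also needs ffmpeg)

    model = whisper.load_model(model_name)  # weights are downloaded once, then cached
    result = model.transcribe(path, **build_options(**kwargs))
    return result["text"]


if __name__ == "__main__":
    print(build_options(language="nl", translate=True))
    # Hypothetical file name; uncomment with a real recording:
    # print(transcribe_locally("interview.wav", model_name="small", language="nl"))
```

Only the model weights are fetched over the network; the recording itself is read and processed locally.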

Is ASR Fully Developed?

As of now, in June 2023, the answer is no. Whisper still has some areas for improvement. Drawbacks include the absence of diarization (identifying the speaker) and occasional over-simplification of transcriptions. For example, hesitant speech such as "I um I, I thought I'd do that for a moment" is typically recognized by Whisper as "I thought I'd do that for a moment".

This behavior likely results from Whisper's decoder, which works much like a ChatGPT-style language model and tends to turn recognized speech into fluent sentences. While this is generally effective for transcribing most speech, it is not always ideal for research on speech or dialogue, where hesitations, pauses, repetitions, and other disfluencies are the object of study.
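To make concrete what is lost, the toy sketch below strips filler words and immediate word repetitions from a verbatim transcript and compares the result with the fluent version from the example above. It is not how Whisper works internally; the filler list and function names are assumptions chosen for illustration.

```python
import re

FILLERS = {"um", "uh", "er", "erm"}  # illustrative list, not exhaustive


def words_of(text: str) -> list:
    """Lowercase the text and split it into words, keeping apostrophes."""
    return re.findall(r"[a-z']+", text.lower())


def strip_disfluencies(text: str) -> list:
    """Drop filler words and immediate repetitions of the same word."""
    cleaned = []
    for word in words_of(text):
        if word in FILLERS:
            continue
        if cleaned and cleaned[-1] == word:
            continue
        cleaned.append(word)
    return cleaned


if __name__ == "__main__":
    verbatim = "I um I, I thought I'd do that for a moment"
    fluent = "I thought I'd do that for a moment"
    print(strip_disfluencies(verbatim) == words_of(fluent))
    print(len(words_of(verbatim)) - len(words_of(fluent)), "tokens lost")
```

A researcher studying hesitations would need exactly those dropped tokens, which is why a fluent transcript alone can be insufficient.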

Numerous researchers are diligently working to improve diarization and to facilitate a more literal transcription of speech. As soon as advancements are made and information on how to utilize them becomes available, we will provide updates here.

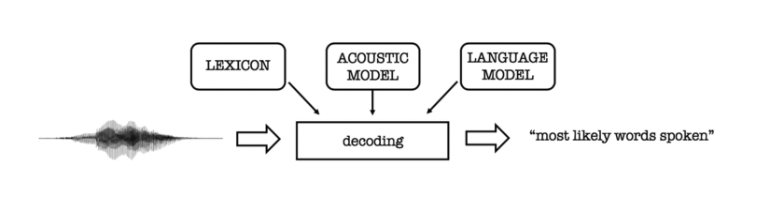

Old ASR

Traditional ASR systems combine HMMs (Hidden Markov Models) and GMMs (Gaussian Mixture Models) and require force-aligned data. Forced alignment is the process of taking the text transcription of an audio speech segment and determining where in time particular words occur in that segment. As the accompanying illustration shows, this approach combines a lexicon model, an acoustic model, and a language model to make transcription predictions.
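The output of forced alignment can be pictured as a list of words with start and end times. The sketch below uses made-up timings and a hypothetical `AlignedWord` type to show that structure; a real aligner would compute the timings from the audio.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class AlignedWord:
    word: str
    start: float  # seconds from the beginning of the audio segment
    end: float


# Made-up timings for illustration only.
ALIGNMENT = [
    AlignedWord("speech", 0.00, 0.42),
    AlignedWord("recognition", 0.42, 1.10),
    AlignedWord("works", 1.25, 1.60),
]


def word_at(alignment: List[AlignedWord], t: float) -> Optional[str]:
    """Return the word being spoken at time t, or None during silence."""
    for item in alignment:
        if item.start <= t < item.end:
            return item.word
    return None


if __name__ == "__main__":
    print(word_at(ALIGNMENT, 0.5))
    print(word_at(ALIGNMENT, 1.2))
```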

Each step is defined in more detail below:

Lexicon Model

The lexicon model describes how words are pronounced phonetically. You usually need a custom phoneme set for each language, handcrafted by expert phoneticians.
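In its simplest form, a lexicon is a lookup table from words to phoneme sequences. The sketch below uses a tiny hand-written dictionary with ARPAbet-style symbols (stress marks omitted); the entries and the function name `phonemes_for` are illustrative assumptions.

```python
# Toy pronunciation lexicon: word -> phoneme sequence.
LEXICON = {
    "speech": ["S", "P", "IY", "CH"],
    "speed": ["S", "P", "IY", "D"],
    "text": ["T", "EH", "K", "S", "T"],
}


def phonemes_for(sentence: str) -> list:
    """Concatenate the phonemes of each word; unknown words raise KeyError."""
    phonemes = []
    for word in sentence.lower().split():
        phonemes.extend(LEXICON[word])
    return phonemes


if __name__ == "__main__":
    print(phonemes_for("speech text"))
```

The `KeyError` on unknown words hints at why such lexicons need expert maintenance: every word the system should recognize must have an entry.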

Acoustic Model

The acoustic model (AM) models the acoustic patterns of speech. Its job is to predict which sound or phoneme is being spoken in each speech segment of the force-aligned data. The acoustic model is usually an HMM or GMM variant.

Language Model

The language model (LM) models the statistics of language. It learns which sequences of words are most likely to be spoken, and its job is to predict which words will follow on from the current words and with what probability.
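One of the simplest language models is a bigram model, which estimates the probability of the next word from counts of word pairs. The sketch below trains one on a four-sentence toy corpus; the corpus, the `<s>` and `</s>` boundary markers, and the function names are assumptions for illustration, and no smoothing is applied.

```python
from collections import Counter, defaultdict

CORPUS = [
    "recognize speech",
    "recognize speech today",
    "recognize speech",
    "wreck a nice beach",
]


def train_bigrams(sentences):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev):
    """Maximum-likelihood estimate of P(next | prev), without smoothing."""
    total = sum(counts[prev].values())
    if total == 0:
        return {}
    return {word: n / total for word, n in counts[prev].items()}


if __name__ == "__main__":
    counts = train_bigrams(CORPUS)
    print(next_word_probs(counts, "<s>"))
    print(next_word_probs(counts, "speech"))
```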

Decoding

Decoding is the process of using the lexicon, acoustic, and language models to produce a transcript.
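A heavily simplified view of decoding is to score a handful of candidate transcripts by adding the acoustic log-probability to a weighted language-model log-probability and keeping the best one. Real decoders search a much larger space (for example with Viterbi or beam search) and use the lexicon to connect words to phonemes; the scores, the `lm_weight` parameter, and the function name below are made up for illustration.

```python
import math

# Hypothetical scores for two competing hypotheses:
#   "acoustic" = log P(audio | words), "lm" = log P(words).
HYPOTHESES = {
    "recognize speech": {"acoustic": math.log(0.30), "lm": math.log(0.020)},
    "wreck a nice beach": {"acoustic": math.log(0.35), "lm": math.log(0.001)},
}


def decode(hypotheses, lm_weight=1.0):
    """Return the hypothesis with the highest combined log score."""
    def score(item):
        _, scores = item
        return scores["acoustic"] + lm_weight * scores["lm"]

    best, _ = max(hypotheses.items(), key=score)
    return best


if __name__ == "__main__":
    print(decode(HYPOTHESES))                 # language model included
    print(decode(HYPOTHESES, lm_weight=0.0))  # acoustic score only
```

With the language model switched off, the acoustically slightly better but implausible hypothesis wins, which is the kind of error the language model is meant to prevent.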

Downsides of Using the Traditional Hybrid Approach

Though still widely used, the traditional hybrid approach to Speech Recognition has several drawbacks. Lower accuracy is the biggest. In addition, each model must be trained independently, which makes the approach time- and labor-intensive. Force-aligned data is also difficult to come by and requires a significant amount of human labor to produce, which makes these systems less accessible. Finally, experts are needed to build a custom phonetic set in order to boost the model’s accuracy.

For additional information, see here.